Implementing the feed

Antony Woods

11 Sep 2020

If you've visited WorksHub or one of our sites in the last few weeks, then hopefully you've noticed that we launched a new landing page and dashboard which revolve around what we call "the feed". In this blog post I aim to talk a little about how we built the feed into our isomorphic Clojure/ClojureScript application (some of which you can see here, by the way).

The what now?

Most of us are familiar with the concept of a 'feed' in the digital realm. It's even in the dictionary: "a facility for notifying the user of a blog or other frequently updated website that new content has been added." Splendid. That's exactly what it is.

Under the bonnet

We started out by assessing our options with respect to how we were going to power the feed, and specifically, the first question we always ask is 'are we going to build this ourselves or use an off-the-shelf solution?' Answering this question is complex and there are various factors to consider, including but not limited to:

- Storage - how and where do we store the data?

- Availability - how do we ensure it's always available?

- Performance - how do we serve the data as quickly as possible?

- Resources - how long will it take us to create a solution? do we have the experience in our team to even do it? how much will it cost to buy a solution?

- Security - what are our security obligations? what are the risks and how do we mitigate these?

Answering these questions inevitably spawns more questions, until we're in a kind of giant question tree(!). Cue research, planning, competitor analysis, design and a lot of chin stroking.

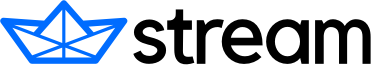

Here at WorksHub we are light on operational resources and so our stack choices bias toward managed services or pieces that are easy to maintain. With this in mind we started with an appraisal of existing, off-the-shelf products and eventually we settled on developing a proof-of-concept using a technology called Stream.

Their website - and they are hiring!

One of their products is literally called 'Feed' and, in the words of their own sales material, it lets you "Build scalable activity feeds in hours instead of months. Scale your activity feed without the notorious difficulties involved with building activity feeds on traditional databases."

We did some digging into Stream and the technology that runs their service. Amazingly, Thierry Schellenbach - the CEO of Stream - keeps an open source version of it on his personal GitHub account. The repository is a gold mine of information about the hows and whys of Stream's inner design and workings. For example:

- It's written in Python with Celery - fantastic choices.

- It's backed by Redis and/or Cassandra - both highly regarded data stores.

- The code is well maintained and there is extensive documentation.

We actually spoke to Stream directly about this and they informed us that their actual product

These factors, combined with the knowledge that we could use the repository - code and comprehensive documentation - to answer philosophical questions, made Stream a strong option and, after our proof-of-concept was successful, we decided to commit to one of their managed packages.

Why not build it ourselves?

At WorksHub we try as hard as possible to run a lean, focused technical function and this hugely influences our decisions about what we build and what we buy. If a commercial solution exists and is within our capacity then that is our preference, because our business is in creating opportunities for software developers, not reinventing the wheel.

Revving the engine

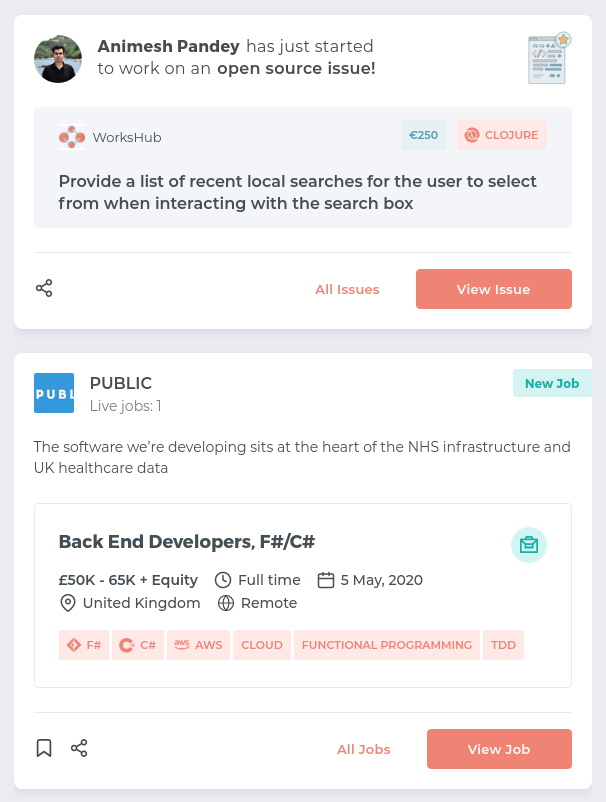

Well, there's a fair amount of code that exists between Stream's service and our frontend. Our backend (written in Clojure) is responsible for generating 'activities' - things that appear on the feed - and relaying those to Stream. These range from trivial activities, such as 'new job' or 'new article' (possibly where you first learned about this one?), to more complex activities such as deciding whether a job or an issue is 'trending'. We handle all of that ourselves and then, upon request, Stream sends us a paginated, filtered, joined collection of these activities, destined for either a public landing page, a particular tag page or a user's dashboard. What you get back depends on exactly what you ask for. Internally there are several (hundred) individual 'feeds' which are stitched together - based on certain rules about who's following whom - and provided as a single list.
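To make the idea of an activity concrete, here is a minimal sketch in Python rather than the Clojure used in production. It assumes an activity carries an actor, a verb and an object (the general shape Stream activities take) plus a list of tags, and it uses an in-memory dictionary as a stand-in for the feeds that Stream hosts. The names `Activity`, `publish`, `read_page` and the `tag:` prefix are hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Activity:
    """One item on the feed; field names are illustrative assumptions."""
    actor: str
    verb: str
    object: str
    tags: tuple = ()
    time: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


# Hypothetical in-memory stand-in for the feeds hosted by Stream.
feeds = defaultdict(list)


def publish(activity):
    """Add the activity to one feed per tag and return the feed names used."""
    targets = [f"tag:{tag}" for tag in activity.tags]
    for name in targets:
        feeds[name].append(activity)
    return targets


def read_page(feed_name, limit=10, offset=0):
    """Return one newest-first page of a single feed."""
    newest_first = sorted(feeds[feed_name], key=lambda a: a.time, reverse=True)
    return newest_first[offset:offset + limit]
```

In this sketch a 'new job' tagged with both Clojure and Scala would be published once and land on two feeds, and each page request is just a slice of the newest-first list.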

As an example, anything tagged with 'Clojure' is added to a dedicated Clojure feed. If you visit https://functional.works-hub.com/feed?tags=clojure%3Atech you can view this feed in isolation. When you visit the Functional Works landing page, however, you see a feed that 'follows' Clojure as well as a bunch of other feeds such as Elixir, Scala, F# etc. Similarly, other landing pages (JavaScript, Golang etc) follow feeds that are relevant to them and their respective ecosystems (although there's actually a lot of overlap).
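The 'follow' relationship can be sketched as a merge of several sorted lists. The Python below is an illustration under stated assumptions, not how Stream does it internally: the feed contents, the `FOLLOWS` table and the `landing_feed` function are all made up, and each tag feed is assumed to be stored newest first. Because of the overlap mentioned above, an item that sits on two followed feeds is only returned once.

```python
import heapq
from itertools import islice

# Hypothetical tag feeds: (ISO date, activity id), each list newest first.
TAG_FEEDS = {
    "clojure": [("2020-09-10", "article:feed"), ("2020-09-07", "job:41")],
    "elixir": [("2020-09-09", "job:42")],
    "scala": [("2020-09-10", "article:feed"), ("2020-09-08", "issue:7")],
}

# Hypothetical follow rules: each landing page follows several tag feeds.
FOLLOWS = {"functional": ["clojure", "elixir", "scala"]}


def landing_feed(page, limit=10, offset=0):
    """Merge every followed feed newest first, drop duplicates, then paginate."""
    sources = [TAG_FEEDS.get(tag, []) for tag in FOLLOWS.get(page, [])]
    merged = heapq.merge(*sources, reverse=True)
    seen = set()

    def unique():
        # Overlapping feeds can yield the same entry more than once.
        for entry in merged:
            if entry not in seen:
                seen.add(entry)
                yield entry

    return list(islice(unique(), offset, offset + limit))


if __name__ == "__main__":
    for when, activity_id in landing_feed("functional"):
        print(when, activity_id)
```

Merging lazily and slicing with `islice` means a request for the first page only walks as far into the followed feeds as it needs to.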

To help our backend communicate with Stream we introduced a new library, Shyvana, which is a wrapper around Stream's own stream-java library. This means we can avoid additional Java interop in our backend and, in the process, make it easier for any other Clojure developers to interact with Stream!

Racing stripes

In case you haven't wandered over to our client repository yet, we use an isomorphic ClojureScript framework called re-frame in order to build the frontend. The benefit of such an approach is that we get all the "good stuff" of single-page applications (modelling, interactivity, dynamism, etc) along with all the "good stuff" of server-side rendered pages (SEO, performance, caching etc). Stay tuned for an article about this in the future!

We use GraphQL (via our own wrapper library, Leona - do you recognise the references?) to retrieve data from the backend. We make an effort to handle the data from Stream as little as possible before sending it to the client, so as to minimize pressure on our side. We also cache recent results so that we can avoid excessive requests. This all means that whether the request originates from the client as XHR or within the server as part of a server-side render, the request handling, the cache coverage and any data transformation are performed by the same Clojure code, compiled into two separate environments.
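As a rough illustration of caching recent results behind a single code path, here is a small time-based cache in Python. It is a sketch under assumptions rather than a description of the real implementation: the 30 second lifetime, the `RecentCache` class, `fetch_from_stream` and the two entry-point functions are all hypothetical.

```python
import time


class RecentCache:
    """Keep results for ttl seconds so repeated requests skip the upstream call."""

    def __init__(self, fetch, ttl=30.0, clock=time.monotonic):
        self._fetch = fetch
        self._ttl = ttl
        self._clock = clock
        self._entries = {}  # key -> (expiry time, value)

    def get(self, key):
        now = self._clock()
        hit = self._entries.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]
        # Missing or expired: fetch again and remember the fresh value.
        value = self._fetch(key)
        self._entries[key] = (now + self._ttl, value)
        return value


def fetch_from_stream(query):
    """Hypothetical stand-in for the real request to Stream."""
    return {"query": query, "activities": []}


cache = RecentCache(fetch_from_stream)


def handle_xhr(query):
    """Entry point for a request coming from the browser."""
    return cache.get(query)


def render_on_server(query):
    """Entry point for a server-side render; it reuses the same cache."""
    return cache.get(query)
```

Both entry points go through the same `cache.get`, so a page that was just rendered on the server does not trigger a second upstream request when the browser asks for the same query moments later.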

Wrap up

Choosing Stream as a technology to help us deliver this feature was a critical decision and allowed our technical team to focus on the domain problems we were trying to solve - delivering bespoke, dynamic content for software engineers to help them learn, grow and find jobs they want - rather than get bogged down with complex infrastructure and operations. The choice was heavily motivated by our ability to fully understand the Stream product, because it began life as an open source project.

As an honourable mention, using a power combo such as Clojure and ClojureScript allowed us to move very quickly and prove out the assumptions we had made about how the feeds would compose and work with our tag system. The decision to commit to this ecosystem is justified on a regular basis.

As far as the end product is concerned I truly hope the results speak for themselves 🎉 and we will continue iterating and improving the feed. In case you have any feedback, please let me know.
